Sounds fantastic, a way to go. Have you managed to get this to work on WebGL as well, or is indirect draw call a requirement here?

Yes, it works on both WegGl2 and WebGPU.

Impressive work, i’m amazed.

A debug tool has been added that allows you to check which mipmap level is used for occlusion checking, as well as the pixel area.

- The verification algorithm has also been improved.

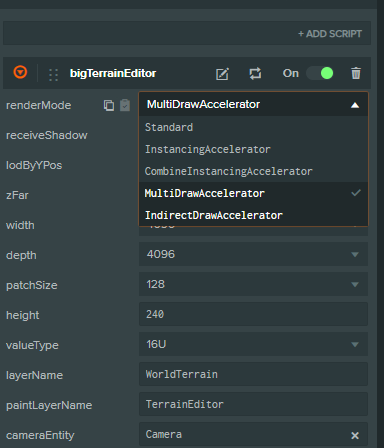

An update has been made: terrain rendering is now performed with a single draw call using the new rendering type MultiDrawInstancingAccelerator , which requires support for the GL_ANGLE_multi_draw extension. This improvement does not affect the functionality of previous rendering methods.

@see MultiDrawPatchInstancing.mts

Improved algorithm for generating MultiDraw sets.

Drawing is completed in 1 call.

We are changing the wording of IPatchInstancing to IPatchDrawAccelerator.

@see PatchMultiDrawAccelerator.mts

Special thanks to @LeXXik for help.

WebGPU demonstrates outstanding performance: rendering the landscape takes only 0.3 ms on an integrated UHD Graphics 730, maintaining a stable 75 fps.

I would not completely trust the GPU timer. Not that it’s miles off, but on WebGPU we can only measure duration of render and compute passes, but nothing else. Compared to WebGL, where the whole frame is measured, including data uploads and stall or similar.

What’s the best way to measure WebGPU performance today? Something like RenderDoc or PIX?

The up to date knowledge we have is here

This likely very much depends on how busy the GPU is. If not busy, it can be fast (3ms), but under heavy load, this will be done after the already submitted rendering is done, and take a lot longer.

I hear you … WebGL is very limited here.

Doing it in vertex shader brings disadvantages too … its hard to say if that’s worth the win in many cases, likely only in some specialized cases.

Very cool.

One solution I used in a large scale game on xbox 360 in the past was cpu occlusion. I had large quads (could be triangles too) in the level, and per frame pick few of the near ones - those are likely to provide some occlusion. Then during the CPU culling, I would also do an occlusion test (all this was on a separate thread of course, harder in JS).

Advantage vs gpu occlusion - you can even completely remove the draw call including material uniform setup and other render state.

This is something that could work well for hilly terrain, you could generate some occluders for the larger hills.

The system is designed for maximum efficiency: it uses a fragment buffer to minimize redundant computations and transmits only changes over the network within the editor, significantly reducing bandwidth usage and improving collaborative performance.

The system allows you to define a limit for the used RAM, specify when data should be offloaded to disk, and set the maximum number of changes stored in the stack.

The EntityField component is in its final stage of refinement.

The following key features have been implemented so far:

- LOD system that uses PatchesBounding as a distance selection accelerator.

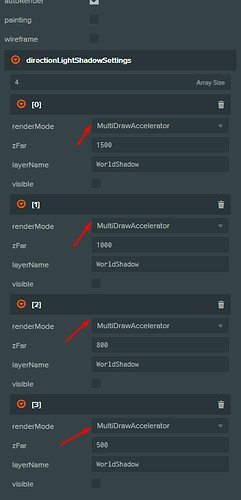

- Custom shadows with LOD support.

- Instances can have varying levels of nesting, with tinting applied to all child render components.

- Frustum and Occlusion group culling linked to terrain patches.

- Automatic height updates based on heightmap changes (can be disabled in settings).

- Editing of position, scale, and rotation for each instance.

![]() New Rendering Accelerators!

New Rendering Accelerators!

Added PatchIndirectDrawAccelerator (WebGPU only) — a powerful tool that works with hierarchical Z-buffer (HZB) and culls landscape patch rendering on the fly! By testing depth in real-time, it significantly reduces GPU load.

Optimized PatchMultiDrawAccelerator for WebGPU:

- Removed the circular texture buffer for patch data

- Now uses u32 firstInstance with packed data — simpler and faster!

Architecture supports Occlusion Queries and Readback HZB Tester:

- Work together or separately for maximum flexibility

- Readback HZB Tester: poor on Android, excellent on PC/iPhone

Shadows now support render type selection for even more optimization options!