It works! Thanks guys

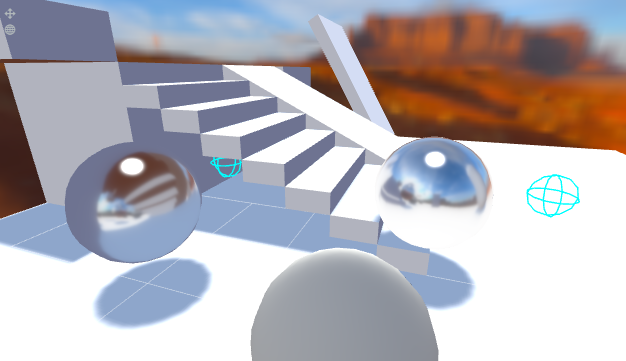

The cyan wire spheres mark the locations of the ReflectionProbes, whose captured reflections can be seen on the nearby shiny spheres.

If it’s useful to anyone, here are the two scripts I ended up with. The cubemaps are rendered one at a time through a static processing queue, which keeps them from ending up grey.

The first is attached once per Reflection Probe. No Camera is necessary; the cameras are created and destroyed on the fly.

export class ReflectionProbe extends pc.ScriptType {

layers: string[];

min: pc.Vec3;

max: pc.Vec3;

envAtlas: pc.Texture;

cubeResolution: number = 64;

atlasResolution: number = 512;

boundingBox: pc.BoundingBox;

static processingQueue: boolean = false;

static renderQueue: ReflectionProbe[] = [];

static list: ReflectionProbe[] = [];

static readonly EVENT_REFLECTIONS_CHANGED: string = "ReflectionProbe_ListChanged";

static processQueue() {

if (ReflectionProbe.processingQueue)

return;

const probe = ReflectionProbe.renderQueue.shift();

if (!probe)

return;

ReflectionProbe.processingQueue = true;

probe.render();

}

static getNearest(point: pc.Vec3) {

let minDist = Number.MAX_VALUE;

let minProbe: ReflectionProbe | null = null;

for (let i = 0; i < ReflectionProbe.list.length; i++) {

const probe = ReflectionProbe.list[i];

const dist = probe.entity.getPosition().distance(point);

if (dist < minDist) {

minDist = dist;

minProbe = probe;

}

}

return minProbe;

}

static getFirstContaining(point: pc.Vec3) {

for (let i = 0; i < ReflectionProbe.list.length; i++) {

const probe = ReflectionProbe.list[i];

if (probe.boundingBox.containsPoint(point))

return probe;

}

return null;

}

static getFirstIntersecting(other: pc.BoundingBox) {

for (let i = 0; i < ReflectionProbe.list.length; i++) {

const probe = ReflectionProbe.list[i];

if (probe.boundingBox.intersects(other))

return probe;

}

return null;

}

initialize() {

this.boundingBox = new pc.BoundingBox();

this.boundingBox.setMinMax(this.min, this.max);

ReflectionProbe.renderQueue.push(this);

ReflectionProbe.processQueue();

}

render() {

const resolution = Math.min(this.cubeResolution, this.app.graphicsDevice.maxCubeMapSize);

const cubemap = new pc.Texture(this.app.graphicsDevice, {

width: resolution,

height: resolution,

format: pc.PIXELFORMAT_RGBA8,

cubemap: true,

mipmaps: true,

minFilter: pc.FILTER_LINEAR_MIPMAP_LINEAR,

magFilter: pc.FILTER_LINEAR

});

// One camera rotation per cubemap face, indexed by face number (0 to 5)

const cameraRotations = [

new pc.Quat().setFromEulerAngles(0, 90, 0),

new pc.Quat().setFromEulerAngles(0, -90, 0),

new pc.Quat().setFromEulerAngles(-90, 0, 180),

new pc.Quat().setFromEulerAngles(90, 0, 180),

new pc.Quat().setFromEulerAngles(0, 180, 0),

new pc.Quat().setFromEulerAngles(0, 0, 0)

];

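// Resolve layer names to ids, skipping any name that does not exist in the scene

const layers = this.layers.map((x: string) => this.app.scene.layers.getLayerByName(x)?.id).filter((id): id is number => id !== undefined);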

const cameraEntities: pc.Entity[] = [];

for (let i = 0; i < 6; i++) {

const renderTarget = new pc.RenderTarget({

colorBuffer: cubemap,

depth: true,

face: i,

flipY: true

});

const e = new pc.Entity("CubemapCamera_" + i);

e.addComponent("camera", {

aspectRatio: 1,

fov: 90,

layers: layers,

renderTarget: renderTarget

});

cameraEntities.push(e);

this.entity.addChild(e);

e.setRotation(cameraRotations[i]);

e.camera.onPostRender = () => {

// Once the last face has rendered, build the env atlas, clean up and start the next probe

if (i === 5) {

this.envAtlas = new pc.Texture(this.app.graphicsDevice, {

width: this.atlasResolution,

height: this.atlasResolution,

format: pc.PIXELFORMAT_RGB8,

mipmaps: false,

minFilter: pc.FILTER_LINEAR,

magFilter: pc.FILTER_LINEAR,

addressU: pc.ADDRESS_CLAMP_TO_EDGE,

addressV: pc.ADDRESS_CLAMP_TO_EDGE,

projection: pc.TEXTUREPROJECTION_EQUIRECT

});

pc.EnvLighting.generateAtlas(cubemap, {

target: this.envAtlas

});

cubemap.destroy();

for (const cameraEntity of cameraEntities) {

cameraEntity.camera.enabled = false;

cameraEntity.camera.renderTarget.destroy();

cameraEntity.camera.renderTarget = null;

cameraEntity.destroy();

}

ReflectionProbe.list.push(this);

ReflectionProbe.processingQueue = false;

ReflectionProbe.processQueue();

this.app.fire(ReflectionProbe.EVENT_REFLECTIONS_CHANGED);

}

};

}

}

};

pc.registerScript(ReflectionProbe, ReflectionProbe.scriptName);

ReflectionProbe.attributes.add("layers", {

type: "string",

array: true,

default: ["World", "Skybox"]

});

ReflectionProbe.attributes.add("min", { type: "vec3", default: [0, 0, 0] });

ReflectionProbe.attributes.add("max", { type: "vec3", default: [1, 1, 1] });
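In case the queue logic is hard to see inside the full script, here is a stripped-down sketch of the same idea with no PlayCanvas in it. `RenderQueue`, `Job` and `makeJob` are made-up names for illustration only; the real script uses `ReflectionProbe.renderQueue` and `processQueue()`, and the "done" callback stands in for the last camera's `onPostRender`.

```typescript
// A job's render() is asynchronous: it calls done() once its cubemap is finished.
type Job = { name: string; render: (done: () => void) => void };

class RenderQueue {
    private static busy = false;
    private static pending: Job[] = [];
    static readonly finished: string[] = [];

    static enqueue(job: Job): void {
        RenderQueue.pending.push(job);
        RenderQueue.processNext();
    }

    private static processNext(): void {
        if (RenderQueue.busy) return;            // a job is still rendering
        const job = RenderQueue.pending.shift();
        if (!job) return;                        // nothing left to do
        RenderQueue.busy = true;
        job.render(() => {
            RenderQueue.finished.push(job.name);
            RenderQueue.busy = false;
            RenderQueue.processNext();           // only now start the next job
        });
    }
}

// Hypothetical jobs that record when they start and let us finish them by hand.
const started: string[] = [];
const completions: Array<() => void> = [];
const makeJob = (name: string): Job => ({
    name,
    render: (done) => {
        started.push(name);
        completions.push(done);
    }
});

RenderQueue.enqueue(makeJob("probeA"));
RenderQueue.enqueue(makeJob("probeB"));  // queued, but not started yet
completions[0]();                        // probeA finishes, so probeB starts
```

The point is just that a second job never starts while the first is still in flight, no matter how many probes call `initialize()` in the same frame.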

And one for any Model whose MeshInstances should use the generated EnvAtlas cubemaps.

import { ReflectionProbe } from "../Rendering/ReflectionProbe";

export class ShaderReflectionProbe extends pc.ScriptType {

continuous: boolean;

useNearest: boolean;

interval: number = 250;

intervalID: number;

initialize() {

this.on("destroy", this.onDestroy, this);

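this.app.on(ReflectionProbe.EVENT_REFLECTIONS_CHANGED, this.onReflectionsChanged, this);

// Probes that finished rendering before this script initialized have already fired their event, so pick them up now

if (ReflectionProbe.list.length > 0)

this.onReflectionsChanged();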

}

onDestroy() {

clearInterval(this.intervalID);

this.off("destroy", this.onDestroy, this);

this.app.off(ReflectionProbe.EVENT_REFLECTIONS_CHANGED, this.onReflectionsChanged, this);

}

onReflectionsChanged() {

clearInterval(this.intervalID);

this.getProbe();

if (this.continuous) {

this.intervalID = setInterval(this.getProbe.bind(this), this.interval);

}

}

getProbe() {

if (!this.entity?.model)

return;

for (const meshInstance of this.entity.model.meshInstances) {

const probe = this.useNearest ? ReflectionProbe.getNearest(meshInstance.aabb.center) : ReflectionProbe.getFirstContaining(meshInstance.aabb.center);

const envAtlas = probe?.envAtlas ?? null;

// @ts-ignore

meshInstance.material.envAtlas = envAtlas;

meshInstance.material.update();

}

}

};

pc.registerScript(ShaderReflectionProbe, ShaderReflectionProbe.scriptName);

ShaderReflectionProbe.attributes.add("continuous", { type: "boolean", default: false });

ShaderReflectionProbe.attributes.add("useNearest", { type: "boolean", default: false });
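To show what the `useNearest` switch changes, here is a rough standalone sketch of the two lookups. The `Vec3` and `Probe` types and the "hall" and "room" probes are invented for the example; the real script uses `pc.Vec3`, `pc.BoundingBox` and the static `ReflectionProbe.list`. As in the script, `min` and `max` are treated as world-space corners.

```typescript
type Vec3 = { x: number; y: number; z: number };
type Probe = { name: string; position: Vec3; min: Vec3; max: Vec3 };

function distance(a: Vec3, b: Vec3): number {
    return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

function containsPoint(probe: Probe, p: Vec3): boolean {
    return p.x >= probe.min.x && p.x <= probe.max.x &&
           p.y >= probe.min.y && p.y <= probe.max.y &&
           p.z >= probe.min.z && p.z <= probe.max.z;
}

// Always returns a probe if the list is non-empty, however far away it is.
function getNearest(probes: Probe[], point: Vec3): Probe | null {
    let best: Probe | null = null;
    let bestDist = Number.MAX_VALUE;
    for (const probe of probes) {
        const d = distance(probe.position, point);
        if (d < bestDist) {
            bestDist = d;
            best = probe;
        }
    }
    return best;
}

// Returns null when the point lies outside every probe's box.
function getFirstContaining(probes: Probe[], point: Vec3): Probe | null {
    for (const probe of probes) {
        if (containsPoint(probe, point)) return probe;
    }
    return null;
}

const probes: Probe[] = [
    { name: "hall", position: { x: 0, y: 0, z: 0 }, min: { x: -5, y: -5, z: -5 }, max: { x: 5, y: 5, z: 5 } },
    { name: "room", position: { x: 20, y: 0, z: 0 }, min: { x: 15, y: -5, z: -5 }, max: { x: 25, y: 5, z: 5 } }
];

const between: Vec3 = { x: 9, y: 0, z: 0 };    // outside both boxes
const inRoom: Vec3 = { x: 16, y: 0, z: 0 };    // inside the "room" box

const nearestBetween = getNearest(probes, between);
const containingBetween = getFirstContaining(probes, between);
const nearestInRoom = getNearest(probes, inRoom);
const containingInRoom = getFirstContaining(probes, inRoom);
```

So with `useNearest` off, a mesh sitting between probe volumes gets no probe at all (and the script sets its `envAtlas` to `null`), while with it on the mesh falls back to the closest probe.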
